This is the first in a two-part series reviewing and recapping DORA’s two 10th Anniversary plenary sessions (watch the recording of both sessions here).

An Indonesian translation of this blog was created by panelist Dasapta Erwin Irawan and can be found here.

The 10th anniversary of the Declaration on Research Assessment (DORA) provided an opportunity to reflect on its remarkable journey, its impact on research assessment practices, and its ongoing relevance in shaping the future of research evaluation. As part of the DORA at 10 celebrations, the Asia-Pacific plenary session was dedicated to assessing progress toward, and charting a roadmap for, responsible research assessment in the Asia-Pacific region. The event opened with remarks from Ginny Barbour, DORA Vice-Chair, Director of Open Access Australasia, Australia, and one of the founding editors of PLoS Medicine. The opening remarks were followed by a keynote presentation from Mai Har Sham, Pro-Vice-Chancellor/Vice President (Research) and Choh-Ming Li Professor of Biomedical Sciences at The Chinese University of Hong Kong, and then by a panel discussion with Dasapta Erwin Irawan, Bandung Institute of Technology, Indonesia; Moumita Koley, Indian Institute of Science, India; Spencer Lilley, Victoria University of Wellington, New Zealand; and Yu Sasaki, Kyoto University Research Administration Center, Japan. DORA’s Acting Program Director, Haley Hazlett, gave the closing remarks. Chris Hartgerink, Liberate Science, and Sudeepa Nandi, DORA Policy Associate, provided technical support during the session.

Opening remarks: Looking back and looking forward

Ginny Barbour traced DORA’s evolution from its inception to its vision for the future. Barbour began by recounting how, while working at PLoS Medicine, she came to recognize the limitations of aggregate journal-level metrics such as the Journal Impact Factor (JIF). The journal’s editorial “The Impact Factor Game” voiced the editors’ dissatisfaction with the JIF’s inability to capture the full effort that goes into bringing important work in a field to publication. This discontent resonated with the wider scientific community. With the shared goal of mitigating the negative effects of flawed and inappropriate assessment metrics on the academic ecosystem, publishers, researchers, and members of learned societies came together to conceive DORA at the 2012 ASCB conference in San Francisco, and DORA was officially launched the following year, in 2013. With support from many international funders and individual institutions, and through collaborations with initiatives worldwide on projects such as Narrative CVs and Project TARA, DORA grew into a full-fledged global initiative that facilitates open dialogue, builds tools and resources, and guides and supports the scientific community with practical ways to reform research assessment. Barbour highlighted how, over the years, DORA has made conscious efforts to enhance its own inclusivity, and its governance now has international and multidisciplinary representation. Details about the significant milestones and activities that have shaped DORA’s progress can be found in the recent retrospective blog.

Keynote session: Research Assessment from 2013-2023

Mai Har Sham reviewed the research assessment landscape in the Asia-Pacific region and explored the challenges and possibilities in this part of the world. Sham highlighted the obstacles faced in implementing DORA and enabling reforms in research assessment despite an increasing number of signatories.

The research ecosystem is a complex interconnected network that relies not only on intra-institutional relationships but also on inter-organizational ones for funding, cross-disciplinary collaborations, academia-industry partnerships, etc. These professional relationships depend on research assessment at both individual and institutional levels. Therefore, it is crucial to choose appropriate performance indicators for individuals (influencing job promotion, tenure, grants, and rewards) and for institutions (impacting reputation, funding allocation, partnerships and research strategy).

For universities and research institutions in particular, performance indicators span several components: research outputs (publications, citation impact, patents, etc.), research income (grants, industry collaborations, spin-off companies, and patent licensing), awards received by professors and students, reputation (research impact, student and trainee outcomes, etc.), and world rankings. Despite the many attributes that could be captured to assess institutional performance, there are clear biases favoring certain outcomes and a lack of systematic assessment of all components. Large-scale research assessment exercises run by funding bodies, such as Excellence in Research for Australia (ERA) by the Australian Research Council (ARC) and the Hong Kong Research Assessment Exercise (RAE) by the Research Grants Council (RGC), held rounds in 2010 and 2014, respectively. Initially, their primary focus was on publication-based metrics to assess research outputs. However, there has been a gradual shift in the outlook on research assessment, driven by active advocacy from DORA, the Leiden Manifesto, and the Hong Kong Principles. Large-scale assessment exercises are now expanding their evaluation criteria to include research impact and research environment alongside research output, weighting these factors to varying degrees. Although the Hong Kong Principles and others recommend evaluating the entire research process, from conception and design through methods to dissemination and impact, in practice this is still far from realized in large-scale assessment exercises.

The evolving landscape, though slow to change, brings hope for the future. Sham cautioned that the shift would not be radical, mainly because several barriers unique to the region must be overcome before a larger-scale movement can take hold. Institutions, funding agencies, and governments will need to play a central role in defining assessment criteria, aligning incentives, and providing resources for systemic change; equally key will be engaging researchers, considering their perspectives, and building trust in alternative assessment processes. However, resistance from individuals and institutions has complicated matters. In addition, Sham outlined several challenges specific to the Asia-Pacific context: 1) low levels of awareness, 2) the absence of determined leaders to drive change and build policies and infrastructure, 3) a lack of working models, guidance, and alternatives for recognizing broader research contributions, 4) technical implementation challenges, 5) limited support for open scholarship, and, last but most importantly, 6) issues of regional diversity and inclusivity, such as differences in funding agencies, policies, and cultural factors. It is therefore important to acknowledge and address these regional contexts and difficulties.

In closing, Sham noted that the Asia-Pacific region has made progress, but continued efforts are needed to improve research assessment practices and build trust in new approaches. During the Q&A session, Sham discussed topics including transparency in university rankings, research procedures, and peer review, emphasizing in particular the importance of transparent peer review and open scholarship for improving research assessment. She also noted the need to eliminate biases in the review process in the region and to provide fair, adequate, and accessible training and support for responsible and transparent reviewing.

Panel discussion: On the present and future of responsible research assessment

Research assessment in each country has its own characteristics and challenges. Following the keynote, panelists Spencer Lilley (Victoria University of Wellington, New Zealand), Yu Sasaki (Kyoto University Research Administration Center, Japan), Dasapta Erwin Irawan (Bandung Institute of Technology, Indonesia), and Moumita Koley (Indian Institute of Science, India) described their experiences and perspectives on research assessment. They also discussed the key actors, barriers, and future directions in adopting DORA to implement research assessment reforms in their respective countries. All four countries rely, to varying degrees, on quantitative, publication-based metrics for funding allocation, institutional reputation, and more, and all are looking to change this.

Lilley described the Performance-Based Research Fund (PBRF) system, which has been in place for over 20 years in Aotearoa New Zealand. PBRF assessments are based on individual performance, making it critical for researchers to “achieve” well in order to sustain and maximize their institution’s research funding. Looking ahead, however, the upcoming round of assessments in New Zealand will prioritize identifying research excellence without relying solely on impact factors. In particular, there will be efforts to be more inclusive of Māori and other Pacific peoples, with the aim of supporting indigenous researchers in the workforce and knowledge generation relevant to indigenous communities. Lilley identified the gap in understanding cultural complexity and relevance in peer review as one of the barriers to overcome, emphasizing the need for awareness and training among editorial boards and peer reviewers so that they can evaluate indigenous research effectively. Open Science is also gaining importance, with external funders requiring funded research to be openly accessible. At the national level, the main drivers of a better research culture, through changes to research assessment and open access, are the national funding agencies, which have only recently begun incorporating Narrative CVs and similar tools. However, there is still scope to make the process easier for marginalized and indigenous researchers.

In Japan, as Sasaki highlighted, quantitative metrics play a dominant role and are often used for university rankings and strategic management. Despite some awareness of responsible research assessment initiatives like DORA, there remains an outsized focus on bibliometric indicators in performance evaluations. Sasaki also noted that low awareness of DORA among administrative staff points to the need for better engagement. Efforts supported by a DORA Community Engagement Grant have focused on developing an issue map to understand the challenges of reforming research assessment in Japan. The map requires ongoing engagement and is expected to become a common platform that brings together researchers, research administrators, and funders for open dialogue on the need to foster responsible research assessment.

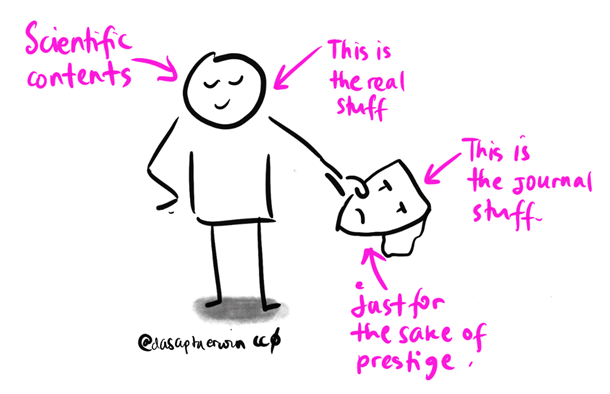

According to Irawan, although research assessment in Indonesia heavily depends on quantitative metrics such as citations and journal impact factors. However, with the drive for Open Science promotions there is a growing awareness among researchers about the importance of sharing research content and materials widely. While DORA’s guidance has evidently impacted in broadening perspectives at the grassroots level, the government funders’ slow pace of change poses a major barrier to effective research assessment reform at the national level.

Image Credit: Dasapta Erwin Irawan

Koley, also a DORA Community Engagement Grant recipient, shared findings from their project showing that research assessment in India involves a mix of qualitative and quantitative metrics. Most institutions in India rely heavily on quantitative metrics such as impact factors and citation counts, and only a handful prioritize qualitative evaluation. The national ranking framework incorporates bibliometric parameters based exclusively on Web of Science- and Scopus-indexed journals, but efforts are under way to make the assessment framework more responsible and transparent. There is increasing awareness of the limitations of relying solely on quantitative metrics. Engaging diverse stakeholders, including researchers, faculty, and funding agencies, has been crucial for raising awareness about responsible research assessment and promoting discussion of improvements. In India, senior science leaders and researchers play a key role in shaping research culture, and disciplinary context contributes significantly.

Looking to the future, Lilley would like to see more advocacy for greater transparency, reduced competition, and increased collaboration in research, with the scientific community valuing and supporting indigenous research. Sasaki stressed the pressing need for funders and research administrators to act as agents of change against the enduring influence of JIFs and university rankings. Irawan hoped to encourage the removal of “prestige” masks and a focus on the quality and content of research rather than journal-based metrics, and Koley envisioned a comprehensive, robust research assessment framework that balances qualitative and quantitative metrics, promotes diversity and inclusivity, and delivers social impact.

“greater transparency, reduced competition, and increased collaboration in research, with an emphasis on the scientific community valuing and supporting indigenous research”

Spencer Lilley

Conclusion

While celebrating DORA’s path and impact over the past 10 years, this event also highlighted the importance of engaging all stakeholders in the scientific community, from different parts of the globe and with different perspectives and needs, to bring global research assessment reform goals to fruition in the coming years.

Reading List

Non-DORA resources referenced or shared during the call:

- The PLoS Medicine Editors (2006). The Impact Factor Game. https://doi.org/10.1371/journal.pmed.0030291

- Hicks D, Wouters P, Waltman L, de Rijcke S, Rafols I (2015). Bibliometrics: The Leiden Manifesto for research metrics. https://doi.org/10.1038/520429a

- Moher D, Bouter L, Kleinert S, Glasziou P, Sham MH, Barbour V, Coriat AM, Foeger N, Dirnagl U (2020). The Hong Kong Principles for assessing researchers: Fostering research integrity. https://doi.org/10.1371/journal.pbio.3000737

- Research Excellence Framework: https://www.ukri.org/about-us/research-england/research-excellence/research-excellence-framework/

- Hong Kong Research Assessment Exercise: https://www.ugc.edu.hk/eng/ugc/activity/research/rae/rae2020.html

- Excellence in Research for Australia: https://www.arc.gov.au/evaluating-research/excellence-research-australia

- Nosek BA, et al. (2023). Transparency and Openness Promotion (TOP) Guidelines. https://osf.io/9f6gx/?view_only=

- Guidance for research organisations on how to implement responsible and fair approaches for research assessment: https://wellcome.org/grant-funding/guidance/open-access-guidance/research-organisations-how-implement-responsible-and-fair-approaches-research

- Japan Inter-institutional Network for Social Sciences, Humanities, and Arts (JINSHA) Issue Map on Research Assessment in Humanities and Social Sciences: https://www.kura.kyoto-u.ac.jp/en/act/20220900/

DORA resources:

- The Declaration on Research Assessment: https://sfdora.org/read/

- Fritch R, Hatch A, Hazlett H, and Vinkenburg C. (2021). Using Narrative CVs. https://zenodo.org/record/5799414#.YeM-41lOlPY

- Ginny Barbour and Haley Hazlett (2023). From declaration to global initiative: a decade of DORA. https://sfdora.org/2023/05/18/from-declaration-to-global-initiative-a-decade-of-dora/

- Anna Hatch, Ginny Barbour and Stephen Curry (2020). The intersections between DORA, open scholarship, and equity. https://sfdora.org/2020/08/18/the-intersections-between-dora-open-scholarship-and-equity/

- Haley Hazlett and Stephen Curry (2023). Towards the future of responsible research assessment: Announcing DORA’s new three-year strategic plan. https://sfdora.org/2023/03/29/towards-the-future-of-responsible-research-assessment-announcing-doras-new-three-year-strategic-plan/

- DORA Tools in Resource Library: https://sfdora.org/resource-library/?_resource_type=tools&_dora_produced=1

- Futaba Fujikawa and Yu Sasaki (2023). Creating a Platform for Dialogues on Responsible Research Assessment: The Issue Map on Research Assessment of Humanities and Social Sciences. https://sfdora.org/2023/02/16/creating-a-platform-for-dialogues-on-responsible-research-assessment-the-issue-map-on-research-assessment-of-humanities-and-social-sciences/

- Suchiradipta Bhattacharjee and Moumita Koley (2023). Exploring the Current Practices in Research Assessment within Indian Academia. https://sfdora.org/2023/02/16/community-engagement-grant-report-exploring-the-current-practices-in-research-assessment-within-indian-academia/

Sudeepa Nandi is DORA’s Policy Associate.