Guest post by Iratxe Puebla, from Make Data Count.

The majority of research activities involve creating, collecting, or using data. While datasets constitute the backbone of research activities, they are currently sidelined in research evaluation frameworks, which predominantly focus on article publications. This is a missed opportunity: recognition for datasets is an important step toward highlighting the breadth of outputs arising from research activities, and toward creating opportunities to recognize vital contributions like data curation and enrichment. This post highlights the alignment of Make Data Count and DORA’s work on responsible research assessment, and how our activities support recognition of diverse scholarly outputs.

Make Data Count believes that data deserve a place in tenure, promotion, and research evaluation. We are a community initiative that works to advance the tools and practices necessary for meaningful data evaluation and responsible data metrics. Our approach to responsible metrics aligns with DORA’s: responsible metrics should include a range of indicators (both qualitative and quantitative) that are contextualized so that they can be interpreted in specific evaluation settings. In addition, data metrics should be auditable, that is, built on open data that is accessible to the community and can be scrutinized.

Incorporating data into the ways we evaluate research activities is not only a way of reflecting the importance of data, but also an essential step for responsible research assessment. A key motivation behind efforts to reform research evaluation is to move away from oversimplified metrics with a narrow focus on publication volume, so that we can gain a broader view into the rigor, integrity and reach of researchers’ contributions. This is not possible if the evaluation remains limited to the number of journal articles.

Researchers produce and share data across the research process, along with other outputs such as project plans, protocols, code and many others. Incorporating these diverse outputs in research assessment aligns with principles of open scholarship, but also provides signals about the rigor, integrity, and community value of the research undertaken. Data and other open outputs shared across the research process enable community engagement and corrections, traceability for the research activities, and protection against some of the recent publication ethics challenges related to fake publications produced for CV padding. By reporting their data contributions and how the community engaged with the data, researchers are showcasing their commitment to transparent and rigorous research practices that also open the door to a broader range of collaborations.

The building blocks for robust and responsible data evaluation

While many may agree on the benefits, challenges can arise when we get to the specifics of implementation. What does including data in the evaluation of research and researchers actually look like in practice? In the simplest terms, it means three things: encouraging researchers to report their data contributions; ensuring that review committees include relevant expertise and have guidance on how to assess data; and articulating which qualitative summaries or quantitative measures of usage should be reported, supported by reliable open sources of information.

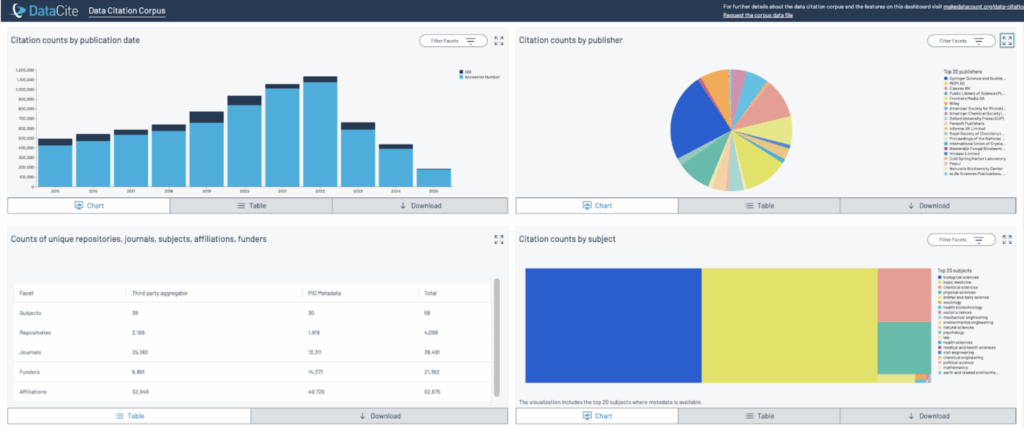

Over the last ten years, Make Data Count has been working on the building blocks to make these steps possible. We have developed infrastructure, workflows and standards to enable repositories, data platforms and journals to collect, standardize and share information about the use of datasets. We developed the COUNTER Code of Practice for Research Data as a standard to normalize and aggregate counts of views and downloads so that they can be interpreted and compared. Thanks to this, a number of repositories, including the generalist repositories in the NIH-funded GREI initiative, now regularly report normalized counts of interactions with datasets. Make Data Count also developed workflows to collect and aggregate citations to datasets. We are building on that work with the Data Citation Corpus, a large-scale open aggregate of data citations that currently hosts almost 10 million unique data citations and is substantially expanding our view into the uses of data as part of publications.

Data Citation Corpus dashboard: https://corpus.datacite.org/dashboard
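
To make the idea of normalized usage counts more concrete, here is a minimal Python sketch. It is not Make Data Count's implementation, and it does not reproduce the full COUNTER Code of Practice for Research Data. It assumes a hypothetical event log (the `UsageEvent` fields are invented for illustration), skips events flagged as robot traffic, and collapses repeat requests from the same session within 30 seconds into a single count. The 30-second window is an assumption modeled loosely on the double-click rule used in COUNTER-style reporting.

```python
from collections import Counter
from datetime import datetime, timedelta
from typing import Iterable, NamedTuple


class UsageEvent(NamedTuple):
    """Hypothetical raw log record; field names are illustrative only."""
    dataset_id: str
    session_id: str
    timestamp: datetime
    kind: str               # "view" or "download"
    is_robot: bool = False  # assumed to be flagged upstream


# Assumed window for treating repeat requests as one interaction.
DOUBLE_CLICK_WINDOW = timedelta(seconds=30)


def normalized_counts(events: Iterable[UsageEvent]) -> Counter:
    """Return counts keyed by (dataset_id, kind) after basic filtering."""
    counts: Counter = Counter()
    last_seen: dict = {}
    for ev in sorted(events, key=lambda e: e.timestamp):
        if ev.is_robot:
            continue  # exclude machine traffic from the totals
        key = (ev.dataset_id, ev.session_id, ev.kind)
        prev = last_seen.get(key)
        last_seen[key] = ev.timestamp
        if prev is not None and ev.timestamp - prev <= DOUBLE_CLICK_WINDOW:
            continue  # repeat request inside the window: count once
        counts[(ev.dataset_id, ev.kind)] += 1
    return counts
```

Because every repository would apply the same filtering rules before reporting, totals produced this way can be compared across platforms, which is the point of a shared standard.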

Data evaluation in practice

Data evaluation is becoming a strategic priority for funders and institutions, and several organizations are already taking steps to assess the reach and impact of datasets. The GREI initiative includes implementation of open data metrics as one of its objectives. This group has implemented Make Data Count recommendations for data usage tracking, laying the groundwork so that NIH can better understand the use of the datasets it funds. A number of institutions are also updating their evaluation processes to recognize a broader range of research contributions and activities, including data.

To support these steps, Make Data Count has partnered with HELIOS Open to host the ‘Implementing data evaluation in academia’ Working Group, which is developing resources to advance implementation of data evaluation as part of institutional processes, in particular hiring, review, promotion, and tenure. These resources include case studies of institutions that have incorporated data contributions into their evaluation processes. One example is the Department of Psychology at the University of Maryland, which updated its policies and processes for tenure, promotion and annual reviews so that datasets and other open outputs could be included in assessments of research productivity. If you are thinking about how to bring data evaluation conversations to your institution, we invite you to visit our resources for institutions, and to let us know what additional resources you’d like to see.

Data evaluation is already happening, and there are many ways for the community to engage so that together we continue to shape and advance the infrastructure and practices for data usage. By embedding data in evaluation, we are opening up new ways to look at rigor and impact in research, and supporting more responsible research assessment.

This post is cross-posted on the Make Data Count blog (DOI: 10.60804/hcfm-jx07).