Introduction to Project TARA

As more institutions begin to examine and refine their approach to academic assessment, there is growing awareness of the value of knowledge-sharing resources that support the development of new policies and practices. In light of this need, DORA is building an interactive online dashboard as part of Project TARA to track trends in responsible research assessment for faculty hiring and promotion at academic institutions.

We held a community workshop on November 19, 2021, to identify responsible research assessment practices and categorize source material for the dashboard. This event built on our first community call in September, where we gathered early-stage input on the intended purpose and desired uses of the dashboard.

Overview of the dashboard and discussion

Institutions are at different stages of readiness for research assessment reform and have implemented new practices across a variety of academic disciplines, career stages, and evaluation processes. The dashboard aims to capture the depth and breadth of progress made toward responsible research assessment and to provide a counter-mapping to common proxy measures of success, such as the Journal Impact Factor (JIF), the h-index, and university rankings. To do this, dashboard users will be able to search, visualize, and track university policies for faculty hiring, promotion, and tenure.

Participants identified examples of good practice in faculty evaluation policies at academic institutions, using as a guide the definition of responsible research assessment from the 2020 working paper by the Research on Research Institute (RoRI). For example, the University of Minnesota’s Review Committee on Community-Engaged Scholarship assesses “the dossier of participating scholar/candidates who conduct community-engaged work, then produce a letter offering an evaluation of the quality and impact of the candidate’s community-engaged scholarship.”

“Approaches to assessment which incentivize, reflect and reward the plural characteristics of high-quality research, in support of diverse and inclusive research cultures.”

Responsible research assessment definition from “The changing role of funders in responsible research assessment: progress, obstacles & the way ahead,” RoRI Working Paper No. 3, November 2020.

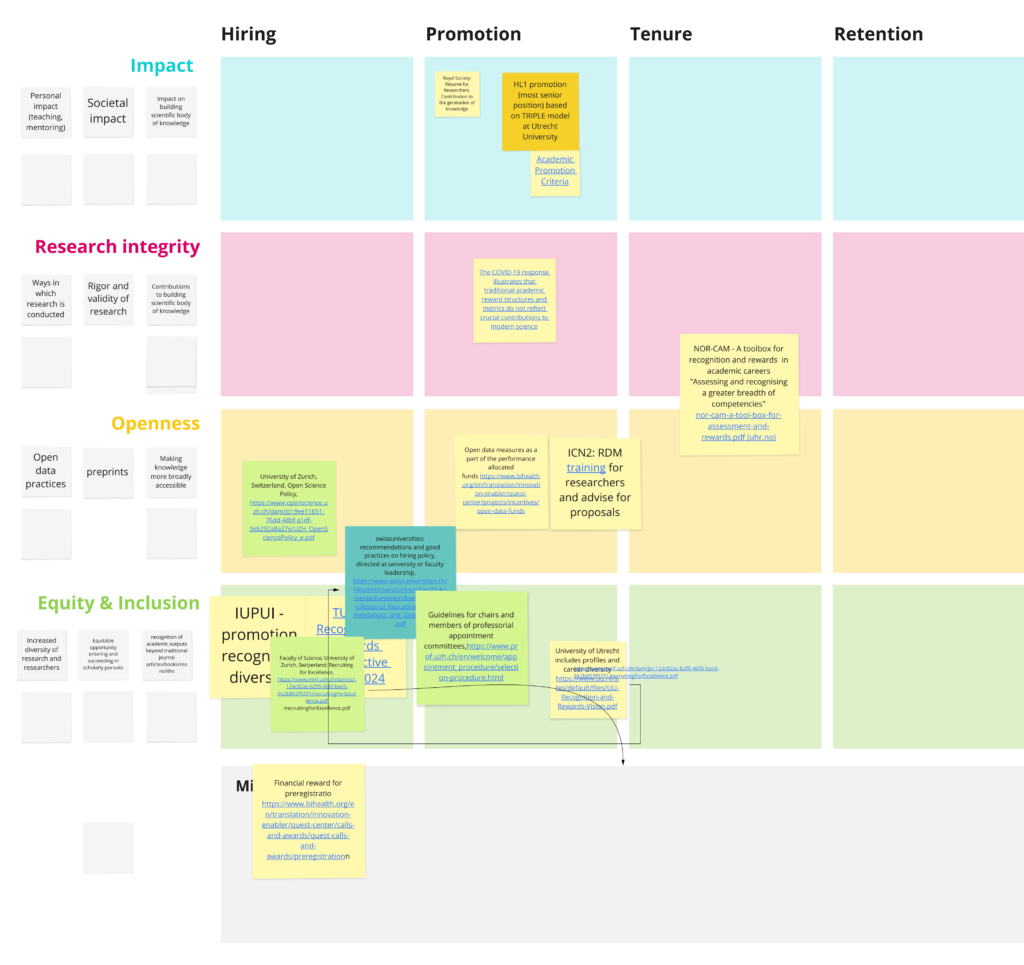

In addition to identifying good practice, participants were asked to explore and discuss how the practices fit into four broad categories: impact, research integrity, openness, and equity and inclusion. From this exercise, we heard that flexibility will need to be built into the dashboard to account for “cross-categorization” of policies and practices. For instance, the Academic Promotions Framework at the University of Bristol in the United Kingdom, which rewards the production of open research outputs such as data, code, and preprints, was originally placed in the openness category. That placement prompted the group to question whether these were the best categories to use, given how closely openness is related to research integrity. Likewise, participants had some difficulty placing policies that recognize public impact within the four categories: while such work may fit in the impact category, it could also apply across the other three. Indeed, many responsible research assessment policies could fall into multiple categories. In another example, the University of Zurich has assessment policies that recognize openness, leadership, and commitment to transparency in hiring or promotion. A flexible, broadly applicable system of categorization, such as meta-tagging, may prove beneficial because it allows a single policy to carry multiple meta-tags.

Another key facet of the workshop discussion was that many academic institutions adopt national or international responsible research assessment initiatives. Examples include university-specific adoption of the nationally developed Norwegian Career Assessment Matrix (NOR-CAM) or the Netherlands Recognition and Rewards Programme. National or regional context is also important to keep in mind, because government rules may limit how much organizations can alter their assessment policies. For example, the University of Catalonia has autonomy in the promotion and hiring of postdoctoral researchers, but external accreditation systems limit its ability to change assessment practices for faculty.

Policies that aim to help academic institutions retain new faculty and staff (i.e., retention policies) were also seen as an important addition to the dashboard. Participants pointed out that many of these policies focus on diversity and inclusion, because measures that make environments more supportive of minoritized faculty and staff also help retain those groups in academia. For example, Western Washington University in the United States created Best Practices: Recruiting & Retaining Faculty and Staff of Color, which offers suggestions and guidance such as making leadership opportunities available and collecting good data on why faculty of color leave universities, so that policy changes intended to encourage them to stay are informed by evidence.

Participants also examined the distribution of the responsible research assessment examples after categorization: Was there an over- or under-representation of examples in certain categories? Was there difficulty categorizing certain examples? Through this exercise, we hoped to gain insight into areas of high and low activity in the development of responsible research assessment policies and practices. For example, many of the policies identified by the group focused on faculty promotion, while fewer related to faculty hiring and retention. As the design of the dashboard’s structure evolves, we will work to ensure that findings like this are not read only as a current lack of activity: highlighting and visualizing these gaps can also reveal opportunities to address oversights and galvanize new institutional activity.

Finally, we heard about the importance of assessing researchers on the process of their work rather than on products alone, an approach that was not strongly represented in the examples participants brought. Policies of this kind, such as the Ghent University evaluation framework in Belgium, could give staff and faculty the flexibility to be assessed on their accomplishments in the context of their own goals.

Next steps

The workshop discussion, together with the source material identified, will help us refine how we visualize and classify source material for the dashboard. Feedback on how well different policies “fit” into the four proposed categories will inform how policies are meta-tagged or otherwise classified so that users can find them easily.

The next phase of dashboard development is to determine the data structure and visualization approach. Our goal is to begin web development in the spring of 2022. Updates on dashboard progress and further opportunities for community engagement can be found on the TARA webpage.

The dashboard is one of the key outputs of Tools to Advance Research Assessment (TARA), a DORA project to facilitate the development of new policies and practices for academic career assessment. TARA is sponsored by Arcadia, a charitable fund of Lisbet Rausing and Peter Baldwin.

Haley Hazlett is DORA’s Program Manager.