Introduction to the Project TARA toolkit

Since 2013, DORA has advocated a number of principles for responsible research evaluation, including avoiding the use of journal-based metrics as surrogate measures of research quality, recognizing a broader range of scholarly outputs in promotion, tenure, and review practices, and emphasizing the importance of responsible research assessment as a mechanism to help address structural inequalities in academia.

To help put these objectives into practice, DORA was awarded funding by Arcadia – a charitable foundation that works to protect nature, preserve cultural heritage, and promote open access to knowledge – in 2021 to support Tools to Advance Research Assessment (Project TARA). One of the outputs of Project TARA is a series of tools to support community advocacy for responsible research assessment policies and practices, created in collaboration with Ruth Schmidt, Associate Professor at the Institute of Design at the Illinois Institute of Technology and informed by internal and external community member input.

On October 25, 2022, we shared the first two tools in a community call that included researchers, publishers, and faculty from across the world. In addition to introducing the new tools, the call aimed to gather community feedback on future tool development for 2023. The session was moderated by Schmidt and Haley Hazlett, DORA’s Acting Program Director. DORA’s Policy Associates, Queen Saikia and Sudeepa Nandi, assisted the session through chat moderation and technical support.

Feedback from the participants

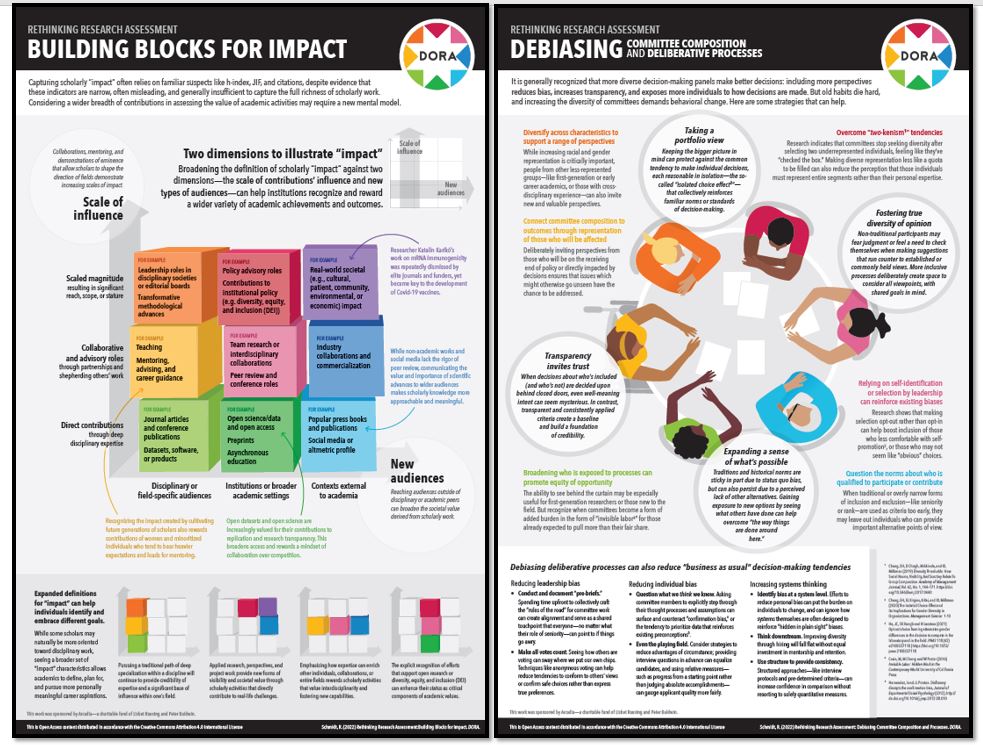

During the first half of the call, Schmidt introduced Debiasing Committee Composition and Deliberative Processes and Building Blocks for Impact. Debiasing Committee Composition and Deliberative Processes was created because, despite best intentions, a reliance on old decision-making norms can still reinforce biases and existing power dynamics. Taking a more deliberate approach to the construction of committees can reduce the likelihood of biased outcomes. The Building Blocks for Impact tool was designed to stretch current mental models that focus primarily on traditional forms of scholarly impact (e.g., citations, grants). This tool introduces a framework of impact characteristics that reflect a wide range of different forms of scholarly contributions.

One of the key points of the community discussion was understanding who the tools were designed for. Schmidt highlighted that Debiasing Committee Composition and Deliberative Processes would be particularly useful for academic faculty and staff who participate in panels for hiring, promotion, tenure, or funding decisions. This tool outlines ideas for advocating transparency, decision-making based on portfolios, fostering a diversity of opinions that invite all viewpoints, and expanding possibilities beyond historical norms. Building Blocks for Impact is a model that outlines how a researcher’s contributions could be evaluated and considered impactful based on scale of influence and new audiences reached. For example, this model outlines that research with societal implications should be considered valid and impactful. Therefore, this tool would be particularly useful for academic faculty and staff seeking to better demonstrate the value of the different work they do, and for leadership seeking to include different forms of impact in hiring, promotion, and tenure policies and practices.

Discussing future tool ideas

During the second half of the call, Schmidt outlined early thinking on what the next set of Project TARA tools will focus on and opened the floor for questions and feedback from participants. The first future tool concept was an “expansion” of the Building Blocks for Impact tool. Such an expansion could contain, for instance, concrete examples of “impact in action”, practical strategies for adoption of broader definitions of “impact”, and visual frameworks. The second future tool concept centered on the fact that different career stages, including transitions into or out of academia, require different forms of support. After hearing the general overview, participants raised questions and provided feedback about the future tool ideas. This feedback fell under the following themes:

Representation: Promotion of a diverse intellectual community is essential for economic, scientific and societal progress in any organization. To this end, there was a general suggestion that the tools could be adapted for a more representative audience by incorporating Equity, Diversity, Inclusion and Accessibility principles.

Transparency: Some of the academics who joined the call highlighted the importance of transparency in hiring, retention and promotion policies. This tied into our second future tool concept to outline the broad range of career paths that researchers can follow. Sometimes decisions can be skewed by the evaluator’s unconscious bias, subjectivity, values or expertise. When evaluation practices are not transparent, these biases often go unnoticed or unchallenged. For more on this, see the Debiasing Committee Composition tool.

Advocacy: One of the participants highlighted the need for assessment principles that encourage new and unique ideas (i.e., ideas that are high risk and high reward). This will be important to consider when developing the 2023 tool that outlines concrete examples of recognizing impact in different contexts.

Inclusivity: Some participants highlighted the need for the academic evaluation system to be more inclusive, especially when it comes to showcasing “excellence” or success stories. Participants pointed out that academic “standards” should be more flexible and inclusive of the broad range of skills and experiences that researchers have to offer. It is also often the case that career paths are more nuanced and complex than a simple transition from PhD to postdoc to faculty. Career pathways away from academia, which one might consider “non-traditional”, also require fair judgement from evaluation panels. Relating to this, the feedback session ended with an interesting question from one of the participants: Does a robust history of work ensure a strong future impact?

Next steps

Feedback from the community call will be incorporated during the development of the future tools. If you use or adapt Debiasing Committee Composition and Deliberative Processes and Building Blocks for Impact in your own assessment practices, we encourage you to let us know at info@sfdora.org.

Queen Saikia is DORA’s Policy Associate.